IBM mainframes (there are only a few still around, but they run some of the largest banks and airline reservation systems) do not use Unix time.

Windows does not use Unix time.

https://frameboxxindore.com/other/does- ... %2C%202038.

I do not know just how Windows stores time internally. It may have a similar problem.

Macintosh computers may have a problem. I'm not a Mac guy, but it is my understanding that recent generations of macOS are Unix-based (built on a BSD-derived core rather than on Linux).

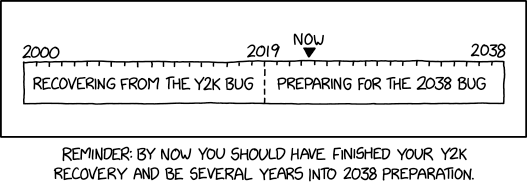

Back in 1999, a lot of people were afraid that cars would stop running and airplanes would fall out of the sky at midnight on Dec. 31, 1999.

The Y2K problem was a little bit different than the Y2038 problem.

Y2K was a problem because in the early days (sixties and seventies), disk storage was very expensive, so programmers often coded only two digits for the year. Even in 2001, a human looking at a screen or report and seeing a birthdate of 08/09/51 could easily understand that it meant 1951. The problem came in when you tried to do what I used to call "date arithmetic". That is, adding, subtracting or comparing two dates.

If someone began collecting Social Security at age 65 in 1990, having been born in 1925, when Jan. 1, 2000 rolled around and the Social Security Administration's computers printed checks, they would subtract 25 from the current year of 00, resulting in the ludicrous (but completely legit to a computer) age of -25, which is obviously less than +65. No check for you!
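Here is a rough sketch of that date arithmetic in Python. The function names are made up for illustration, and real programs of the era were mostly Cobol, so treat this as a picture of the logic rather than of any actual system.

```python
def age_from_two_digit_years(current_yy: int, birth_yy: int) -> int:
    """Age computed from two-digit years only, as many old programs did."""
    return current_yy - birth_yy


def age_from_four_digit_years(current_year: int, birth_year: int) -> int:
    """The same subtraction, but with the century stored as well."""
    return current_year - birth_year


# Born in '25, checked in '90: works fine.
print(age_from_two_digit_years(90, 25))        # 65

# Same person, checked in '00: the age goes negative.
print(age_from_two_digit_years(0, 25))         # -25

# With four-digit years the answer is sensible again.
print(age_from_four_digit_years(2000, 1925))   # 75
```

The subtraction itself never changed. Only the stored width of the year did, which is why the fix was mostly a matter of widening fields and recompiling.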

I was one of the guilty ones. I began programming professionally in 1972, and I remember saying to a co-worker (or as Scott Adams calls them cow-orkers), "I sure hope I'm not still doing this in 2000". Being young and foolish, 28 years in the future seemed an eternity. Alas, I was still working in IT in 2000.

The Y2038 problem is built into the Unix/Linux operating system, wherever time is kept as a signed 32-bit count of seconds. I'm sure that many databases that have date and time stamps are coded using Unix time. In Y2K, we had to modify a few programs, mostly Cobol, to use four-digit years and recompile them. In Unix/Linux, you might find that you have to install the change to the OS and have every single program ready to go at the end of the epoch. A signed 32-bit counter does not roll over from 2**31-1 to 0; it wraps around to -2**31, which is a date in December 1901. So if my program wants to calculate someone's age after the rollover, the OS is going to tell me that the current time is a few hundred or a few thousand seconds after that 1901 date. If someone's birthday is 1,900,000,000 seconds after the beginning of the Unix epoch, and the program subtracts 1.9B from a current time of roughly -2.1B, it will create truly ludicrous results.
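To make the rollover concrete, here is a small Python simulation. It assumes the clock is a signed 32-bit integer that wraps around on overflow, in which case it lands on -2**31 (a date in December 1901). The helper names `wrap_int32` and `to_utc` are my own, not anything the OS provides.

```python
from datetime import datetime, timedelta, timezone

INT32_MAX = 2**31 - 1
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def wrap_int32(n: int) -> int:
    """Simulate storing n in a signed 32-bit integer that wraps on overflow."""
    return (n + 2**31) % 2**32 - 2**31


def to_utc(seconds: int) -> datetime:
    """Convert seconds since the Unix epoch to a UTC datetime."""
    return EPOCH + timedelta(seconds=seconds)


print(to_utc(INT32_MAX))                  # 2038-01-19 03:14:07+00:00
print(to_utc(wrap_int32(INT32_MAX + 1)))  # 1901-12-13 20:45:52+00:00

# Age calculation a few thousand seconds after the rollover.
birthday = 1_900_000_000                  # a birth date in 2030
now_after = wrap_int32(INT32_MAX + 5_000)
age_seconds = now_after - birthday
print(age_seconds < 0)                    # True: the person is "not born yet"
```

Python integers never overflow on their own, which is why the wrap has to be simulated by hand here. In C on a system with a 32-bit `time_t`, the hardware does it for you.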

I think that what they are going to have to do is create a second Unix date held by the OS. The new one, however, would use 64 bits, still counting from 1/1/1970. Between now and 2038, an unmodified program asking for the date would receive the same 32-bit offset that it does now. That gives programmers 16 years to modify their programs to use the new date.

That is, the OS would maintain two dates, one as a 32-bit offset and the other as a 64-bit offset from 1/1/1970. For the next 16 years, the new date would be identical to the old date, except that it would have 32 leading zero bits. In 2038, the old date would roll over (a signed 32-bit value wraps to a negative number, which reads as a date in 1901), but the new date would keep on ticking.
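A sketch of the two-clock idea in Python, again assuming signed counters that wrap on overflow. The names `wrap_signed` and `dual_time` are hypothetical; this only illustrates the arithmetic, not how any real kernel exposes its clocks.

```python
def wrap_signed(n: int, bits: int) -> int:
    """Simulate storing n in a signed integer of the given width."""
    half = 1 << (bits - 1)
    return (n + half) % (1 << bits) - half


def dual_time(true_seconds: int) -> tuple[int, int]:
    """Return (old 32-bit clock, new 64-bit clock) for the same instant."""
    return wrap_signed(true_seconds, 32), wrap_signed(true_seconds, 64)


# Before 2038: both clocks agree, and the top 32 bits of the new one are zero.
old, new = dual_time(1_650_000_000)       # a moment in 2022
print(old == new)                         # True
print(new >> 32)                          # 0

# Ten seconds past the 32-bit limit: the old clock has wrapped, the new has not.
old, new = dual_time(2**31 + 10)
print(old)                                # -2147483638
print(new)                                # 2147483658
```

An unmodified program reading the old value sees no change until the wrap, while a converted program reading the new value never notices 2038 at all.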

I'm sure that 32 bits was selected because, back in 1970, on many small computers, which is where Unix started, the standard was a 16-bit word, with an option of a "long integer" of 32 bits. Now, 64-bit words are standard, with some computers using a 128-bit word. Doing date arithmetic on 64-bit integers is a piece of cake.

I'm sure that the Young Turks at AT&T who first wrote Unix suffered from the same hubris that I did in 1972, and that contributed to the problem as well.